Welcome to Hypervisor 101 in Rust

This is a day-long course to quickly learn the inner workings of hypervisors and the techniques for writing them for high-performance fuzzing.

This course covers the foundations of hardware-assisted virtualization technologies, such as VMCS/VMCB, guest-host world switches and EPT/NPT, as well as useful features and techniques, such as exception interception, for virtual machine introspection for fuzzing.

The class is made up of lectures using the materials within this directory and hands-on exercises with source code under the Hypervisor-101-in-Rust/hypervisor directory.

These lecture materials are written for the gcc2023 branch, which intentionally contains incomplete code for step-by-step exercises. Before you start the hands-on exercises, check out the starting point of the branch as below.

git checkout b17a59dd634a7b0c2b9a6d493fc9b0ff22dcfce5

Prerequisites

- Familiarity with x86_64 architecture

- Rust experience is helpful but not required

- Download the following specs

- Intel 64 and IA-32 Architectures Software Developer’s Manual Volume 3

- AMD64 Architecture Programmer’s Manual Volume 2: System Programming

- The material is based on

- Intel: Revision 78 (December 2022)

- AMD: Revision 3.40 (January 2023)

The goals of this course

- Understand the low-level implementation of the hardware-assisted virtualization technology on x86_64

- Become familiar with techniques for applying the technology to high-performance fuzzing

Motivation

- Hypervisors are

- Cool

- Foundation of cloud platforms: KVM (GCP), Hyper-V (Azure), Nitro Hypervisor (AWS), Xen (Oracle Cloud)

- Interesting

- Foundation of major security features: Virtualization Based Security, Secured-core PC (Platform Properties Assessment Module)

- Used in security research: BitVisor, Intel's Virtualization Based Hardening

- Convenient

- System-level isolation: VMware Workstation/Fusion, ACRN, Nitro Hypervisor, Qubes OS

- System-level inspection: Asahi Linux m1n1 Hypervisor, Cuckoo Sandbox, HVMI, kAFL/Nyx, Cheat Engine DBVM, Blue Pill, antivirus-hypervisors (eg, Avast Premium Security)


- Understanding the essentials is important for anything involving them

- Want to audit code?

- Want to customize them?

- Want to write your own?

- Want to join a team doing those?

How we achieve the goals

What I cannot create, I do not understand -- Richard Feynman

- Writing a fuzzing hypervisor from the ground up

- Familiarizing yourselves with specifications

- Having references to relevant open source implementations

What you learn

- You will have a hypervisor that runs and fuzzes a target as a VM

- You will have a cross-platform hypervisor development and debugging setup

- You will be able to extend the implementation for your use cases

- You will be able to better navigate existing hypervisor code

- You will be prepared for writing your own custom hypervisors

Our fuzzer design

- Greybox fuzzing

- Mutation-based

- Edge coverage guided

- Snapshot-based

- Input: snapshot file, corpus files, patch file

Our hypervisor design

- Creates a VM from the snapshot

- Starts a fuzzing iteration with the VM, meaning:

- injecting mutated input into the VM's memory,

- letting the VM run, and

- observing possible bugs in the VM

- Reverts the VM at the end of each fuzzing iteration

- Runs as many VMs as the number of logical processors on the system

- Is a UEFI program in Rust

- Is tested on Bochs, VMware and select bare metal models

What this class is NOT

- Rust programming course

- Fuzzing course

- The Awesome Fuzzing Discord server is a good place to seek suggestions if interested

- Learning the usage or internals of any specific existing software

- Covering details that are not required for reading the majority of the provided code

- Thus, some descriptions may be oversimplified or even inaccurate.

Demo: our hypervisor fuzzer

- Will show the hypervisor running on VMware and fuzzing the target

Why hypervisor for fuzzing

- Advantages:

- Fuzzing targets are not limited to user-mode

- Substantially faster than emulators

- Examples

- Customized hypervisors: KF/x (Xen), kAFL/Nyx (KVM), HyperFuzzer (Hyper-V)

- Using hypervisor API: What The Fuzz, Rewind, Hyperpom, Snapchange

- Original hypervisors: FalkVisor, Barbervisor

UEFI applications

- UEFI is a pre-OS environment, aka BIOS

- Our execution phase (called "DXE") is:

- single threaded; no thread or process

- ring-0 and long-mode

- single flat address space

- Why

- Less waste of system resources

- OS-agnostic design and dev environment

- No need to worry about compatibility with OS

- Easy to access hardware features

- Well documented API

- Why would we need an OS?

Rust🦀

A language empowering everyone to build reliable and efficient software

- Why

- Why not?

- Absolutely more productive than C/C++ for writing UEFI programs

- an excellent range of libraries (the core library and crates)

- compiler-enforced safeguards, preventing bugs

- As a security minded engineer, you should stop writing new code in C/C++

Classification of hypervisors

- Software-based: Paravirtualization (Xen), binary-translation and emulation (old VMware)

- Hardware-assisted: uses hardware-assisted virtualization technology (HW VT), eg, VT-x and AMD-V (almost all hypervisors)

- vs. emulators/simulators

- Hypervisors run code on real processors (direct execution) plus minimal use of emulation techniques

- Emulators emulate everything; thus, slower

- Many emulators now use HW VT to boost performance: QEMU + KVM, Android Emulator + HAXM, Simics + VMP

- "Hypervisor" is most often interchangeable with "VMM"

- This class exclusively covers hardware-assisted hypervisors

Hypervisor vs host, VM vs guest

- Those are interchangeable

- When HW VT is in use, a processor runs in one of two modes:

- host-mode: the same as usual + a few HW VT related instructions are usable.

- Intel: VMX root operation

24.3 INTRODUCTION TO VMX OPERATION

(...) Processor behavior in VMX root operation is very much as it is outside VMX operation.

- AMD: (the term is not even defined)

- guest-mode: the restricted mode where some operations are intercepted by a hypervisor

- Intel: VMX non-root operation

24.3 INTRODUCTION TO VMX OPERATION

(...) Processor behavior in VMX non-root operation is restricted and modified to facilitate virtualization. Instead of their ordinary operation, certain instructions (...) and events cause VM exits to the VMM.

- AMD: Guest-mode

15.2.2 Guest Mode

This new processor mode is entered through the VMRUN instruction. When in guest-mode, the behavior of some x86 instructions changes to facilitate virtualization.


- The execution context in the host-mode == hypervisor == host

- The execution context in the guest-mode == VM == guest

- Intel: 📖24.2 VIRTUAL MACHINE ARCHITECTURE

- AMD: 📖15.1 The Virtual Machine Monitor

- NB: these definitions are based on the specs. In the context of a particular software or product architecture, these terms may be used with different definitions

- This "mode" is an orthogonal concept to the ring-level

- For example, ring-0 guest-mode and ring-3 host-mode are possible and normal

Types of hypervisors

- Robert P. Goldberg coined VMM architecture types:

- Type I or bare metal: The VMM runs on a bare machine.

- Type II or hosted: The VMM runs on an extended host, under the host operating system.

- Can be understood as:

- Type 1: No OS runs in the host-mode.

- `CPUID` instructions run by your OS would be intercepted by a hypervisor.

- Hyper-V, Xen: even the "admin OS" runs in the guest-mode

- VMware ESXi, BitVisor: no "admin OS"

- CheatEngine, BluePill, antivirus-hypervisor: turns the current OS into guest-mode

- Type 2: A general purpose OS runs in the host-mode.

- `CPUID` instructions run by your OS would not be intercepted.

- KVM, VirtualBox, VMware Workstation: works as a kernel extension. The host OS runs in the host-mode

- Our hypervisor is type-1 (no admin OS)

Hypervisor setup and operation cycle

This chapter explains how a hypervisor sets up and runs a guest, and introduces our first few hands-on exercises.


- (1) Enable: System software enables HW VT and becomes a hypervisor

- (2) Set up: The hypervisor creates and sets up a "context structure" representing a guest

- (3) Switch to: The hypervisor asks the processor to load the context structure into hardware registers and start running in guest-mode

- (4) Return from: The processor switches back to the host-mode on certain events in the guest-mode

- (5) Handle: The hypervisor typically emulates the event and does (3), repeating the process.

(1) Enable: System software enables HW VT and becomes a hypervisor

- HW VT is implemented as an Instruction Set Architecture (ISA) extension

- Intel: Virtual Machine Extensions (VMX); branded as VT-x

- AMD: Secure Virtual Machine (SVM) extension; branded as AMD-V

- Steps to enter the host-mode from the traditional mode:

- Enable the feature (Intel: `CR4.VMXE=1` / AMD: `IA32_EFER.SVME=1`)

- (Intel-only) Execute the `VMXON` instruction

- Platform-specific mode names:

| | Host-mode | Guest-mode |
|---|---|---|
| Intel | VMX root operation | VMX non-root operation |
| AMD | (Not named) | Guest-mode |
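The bit manipulation behind these steps can be sketched in Rust as below. This is a minimal sketch. The helper names are hypothetical. The privileged parts are omitted: reading and writing `CR4`/`IA32_EFER`, executing `VMXON`, and prerequisites such as the CPUID and `IA32_FEATURE_CONTROL` checks. On Intel, `CR4` must also respect the `IA32_VMX_CR4_FIXED0`/`FIXED1` MSRs while in VMX operation, which the last function models.

```rust
/// CR4.VMXE (bit 13): enables VMX on Intel processors.
const CR4_VMXE: u64 = 1 << 13;
/// IA32_EFER.SVME (bit 12): enables SVM on AMD processors.
const EFER_SVME: u64 = 1 << 12;

/// Hypothetical helper: returns the CR4 value with VMX enabled.
fn cr4_with_vmxe(cr4: u64) -> u64 {
    cr4 | CR4_VMXE
}

/// Hypothetical helper: returns the IA32_EFER value with SVM enabled.
fn efer_with_svme(efer: u64) -> u64 {
    efer | EFER_SVME
}

/// Intel only: makes CR4 satisfy the IA32_VMX_CR4_FIXED0/FIXED1 MSRs.
/// Bits set in FIXED0 must be 1, and bits clear in FIXED1 must be 0.
fn adjust_cr4_for_vmx(cr4: u64, fixed0: u64, fixed1: u64) -> u64 {
    (cr4 | fixed0) & fixed1
}
```

In a real hypervisor, the resulting values would be written back with `mov cr4` or `wrmsr` before executing `VMXON` (Intel only).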

(2) Set up: The hypervisor creates and sets up a "context structure" representing a guest

- Each guest is represented by a 4KB structure

- Intel: virtual-machine control structure (VMCS)

- AMD: virtual machine control block (VMCB)

- It contains fields describing:

- Guest configurations such as register values

- Behaviour of HW VT such as what instructions to intercept

- More details later

(3) Switch to: The hypervisor asks the processor to load the context structure into hardware-registers and start running in guest-mode

- Execution of a special instruction triggers switching to the guest-mode

- Intel: `VMLAUNCH` or `VMRESUME`

- AMD: `VMRUN`

- Successful execution of it:

- saves current register values into a host-state area

- loads register values from the context structure, including `RIP`

- changes the processor mode to the guest-mode

- starts execution

- A host-state area is:

- Intel: part of VMCS (host state fields)

- AMD: separate 4KB block of memory specified by an MSR 📖15.30.4 VM_HSAVE_PA MSR (C001_0117h)

- This host-to-guest-mode transition is called:

- Intel: VM-entry 📖CHAPTER 27 VM ENTRIES

- AMD: World switch to guest 📖15.5.1 Basic Operation

(4) Return from: The processor switches back to the host-mode on certain events in the guest-mode

- Certain events are intercepted by the hypervisor

- On that event, the processor:

- saves the current register values into the context structure

- loads the previously saved register values from a host-state area

- changes the processor mode to the host-mode

- starts execution

- This guest-to-host-mode transition is called:

- Intel: VM-exit 📖CHAPTER 28 VM EXITS

- AMD: #VMEXIT 📖15.6 #VMEXIT

- We call it "VM exit"

- Note that the guest uses actual registers and actually runs instructions on a processor.

- There is no "virtual register" or "virtual processor".

- HW VT is a mechanism to perform world switches, ie, changing actual register values.

- Akin to task/process context switching:

- VMCS/VMCB = "task/process" struct

- `VMLAUNCH`/`VMRESUME`/`VMRUN` = context switch to a task

- VM-exit/#VMEXIT = preempting the task
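To make the task-switch analogy concrete, here is a toy model in Rust. It is a sketch, not a description of hardware internals. All type and method names are hypothetical, and only three registers are modelled. As a simplification, host state is saved on entry for both vendors; on Intel, software programs the VMCS host state fields beforehand instead, and VM entry does not save them. The point is that there is only one real set of registers, and world switches just swap their contents.

```rust
/// A tiny, hypothetical subset of the processor's single, real register file.
#[derive(Clone, Copy, Debug, Default, PartialEq)]
struct Registers {
    rip: u64,
    rsp: u64,
    rflags: u64,
}

#[derive(Clone, Copy, Debug, PartialEq)]
enum Mode {
    Host,
    Guest,
}

/// The physical processor: one set of registers plus the current mode.
struct Cpu {
    regs: Registers,
    mode: Mode,
}

/// Stand-in for the VMCS guest state fields / the VMCB state save area.
struct ContextStructure {
    guest: Registers,
}

/// Stand-in for the VMCS host state fields / the VM_HSAVE_PA area.
struct HostStateArea {
    host: Registers,
}

impl Cpu {
    /// VMLAUNCH/VMRESUME/VMRUN: save host state, load guest state, enter guest-mode.
    fn world_switch_to_guest(&mut self, ctx: &ContextStructure, hsa: &mut HostStateArea) {
        hsa.host = self.regs;
        self.regs = ctx.guest;
        self.mode = Mode::Guest;
    }

    /// VM exit: save guest state, reload host state, return to host-mode.
    fn world_switch_to_host(&mut self, ctx: &mut ContextStructure, hsa: &HostStateArea) {
        ctx.guest = self.regs;
        self.regs = hsa.host;
        self.mode = Mode::Host;
    }
}
```

After `world_switch_to_host`, the guest's progress (for example, an advanced `RIP`) survives in the context structure, ready for the next world switch to the guest.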

(5) Handle: The hypervisor typically emulates the event and does (3), repeating the process

- Hypervisor determines the cause of VM exit from the context structure

- Intel: Exit reason field 📖28.2 RECORDING VM-EXIT INFORMATION AND UPDATING VM-ENTRY CONTROL FIELDS

- AMD: EXITCODE field 📖15.6 #VMEXIT

- Hypervisor emulates the event on behalf of the guest

- eg, for `CPUID`, the hypervisor:

- inspects the guest's `EAX` and `ECX` as input

- updates the guest's `EAX`, `EBX`, `ECX` and `EDX` as output

- updates the guest's `RIP`

- Hypervisor switches back to the guest with `VMRESUME`/`VMRUN`
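The `CPUID` handling described above can be sketched as follows. This is a hedged, platform-neutral sketch. `GuestRegisters`, `emulate_cpuid` and the `cpuid` callback are hypothetical names. Real code reads and writes guest registers through the VMCS/VMCB and the manually saved GPRs. It also uses the VM-exit instruction length field (Intel) or the next RIP (AMD, with the NRIP save feature) rather than a hard-coded length.

```rust
/// Hypothetical subset of guest state touched when emulating CPUID.
#[derive(Debug, Default, PartialEq)]
struct GuestRegisters {
    rax: u64,
    rbx: u64,
    rcx: u64,
    rdx: u64,
    rip: u64,
}

/// CPUID is encoded as 0F A2, ie, 2 bytes long.
const CPUID_LENGTH: u64 = 2;

/// Emulates CPUID on behalf of the guest. `cpuid` stands in for whatever source
/// of results the hypervisor uses (eg, the host's CPUID, possibly filtered).
/// It takes (leaf, subleaf) and returns [EAX, EBX, ECX, EDX].
fn emulate_cpuid(regs: &mut GuestRegisters, cpuid: impl Fn(u32, u32) -> [u32; 4]) {
    // Input: EAX (leaf) and ECX (subleaf), ie, the low 32 bits of RAX and RCX.
    let [eax, ebx, ecx, edx] = cpuid(regs.rax as u32, regs.rcx as u32);
    // Output: a 32-bit register write zero-extends into the 64-bit register.
    regs.rax = u64::from(eax);
    regs.rbx = u64::from(ebx);
    regs.rcx = u64::from(ecx);
    regs.rdx = u64::from(edx);
    // Move past the emulated instruction so the guest does not execute it again.
    regs.rip += CPUID_LENGTH;
}
```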

Our goals and exercises in this chapter

- E#1: Enable HW VT

- E#2: Configure behaviour of HW VT

- E#3: Set up guest state based on a snapshot file

- Start the guest

Testing with Bochs

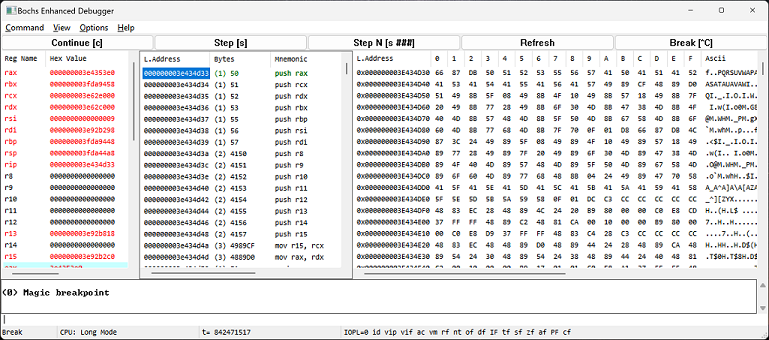

- Bochs is a cross-platform open-source x86_64 PC emulator

- Extremely helpful in the early phase of hypervisor development

- Capable of emulating both VMX and SVM, even on ARM-based systems

- Most importantly, you can debug failure of an instruction

- for example, the error log on failure of `VMLAUNCH`:

```
[CPU0 ]e| VMFAIL: VMCS host state invalid CR0 0x00000000
```

- Built-in debugger

- GUI (Windows-only)

- CLI

```
h|help - show list of debugger commands
h|help command - show short command description
-*- Debugger control -*-
    help, q|quit|exit, set, instrument, show, trace, trace-reg,
    trace-mem, u|disasm, ldsym, slist, addlyt, remlyt, lyt, source
-*- Execution control -*-
    c|cont|continue, s|step, p|n|next, modebp, vmexitbp
-*- Breakpoint management -*-
    vb|vbreak, lb|lbreak, pb|pbreak|b|break, sb, sba, blist,
    bpe, bpd, d|del|delete, watch, unwatch
-*- CPU and memory contents -*-
    x, xp, setpmem, writemem, loadmem, crc, info, deref,
    r|reg|regs|registers, fp|fpu, mmx, sse, sreg, dreg, creg,
    page, set, ptime, print-stack, bt, print-string, ?|calc
-*- Working with bochs param tree -*-
    show "param", restore
```

- It is a good idea to start with Bochs, then move on to VMware, and finally to bare metal

Exercise preparation: Building and running the hypervisor, and navigating code

- You should choose a platform to work on: Intel or AMD.

- Intel: Use the `rust: cargo xtask bochs-intel` task

- AMD: Use the `rust: cargo xtask bochs-amd` task

- We assume you are able to:

- jump to definitions with F12 on VSCode

- build the hypervisor

- run it on Bochs. Output should look like this:

```
INFO: Starting the hypervisor on CPU#0
...
ERROR: panicked at 'not yet implemented: E#1-1', hypervisor/src/hardware_vt/svm.rs:49:9
```

- If not, follow the instructions in BUILDING

- Primary code flow for the exercises in this chapter

```rust
// main.rs
efi_main() {
    start_hypervisor_on_all_processors() {
        start_hypervisor()
    }
}

// hypervisor.rs
start_hypervisor() {
    vm.vt.enable()              // E#1: Enable HW VT
    vm.vt.initialize()          // E#2: Configure behaviour of HW VT
    start_vm() {
        vm.vt.revert_registers() // E#3: Set up guest state based on a snapshot file
        loop {
            // Runs the guest until VM exit
            exit_reason = vm.vt.run()
            // ... (Handles the VM exit)
        }
    }
}
```

Exercise preparation: Code annotations and solutions

- All exercises can be found by searching for `E#` under the `hypervisor` directory.

- One exercise may require updates in multiple locations. For example, `E#1-1` and `E#1-2` are a single exercise, and you must complete both to observe the expected result.

- Some exercises appear in both the `vmx.rs` and `svm.rs` files. In this case, you can choose one based on your platform preference and ignore the other.

- The solution to each exercise will be pushed to the GitHub repo as a commit.

E#1: Enabling VMX/SVM

- Intel: Enable VMX by setting `CR4.VMXE=1`. Then, enter VMX root operation with the `VMXON` instruction.

- AMD: Enable SVM by setting `IA32_EFER.SVME=1`

- Expected result: panic at E#2.

```
INFO: Starting the hypervisor on CPU#0
...
ERROR: panicked at 'not yet implemented: E#2-1', hypervisor/src/hardware_vt/svm.rs:73:9
```
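On Intel, `VMXON` (and later `VMCLEAR`/`VMPTRLD`) take the physical address of a 4KB-aligned region. The first 4 bytes of that region must hold the VMCS revision identifier reported in bits 30:0 of the `IA32_VMX_BASIC` MSR, with bit 31 cleared. Below is a minimal sketch of that initialization. It assumes the MSR value has already been read. `Region` and `init_vmx_region` are hypothetical names, and translating the buffer to a physical address and executing the instruction are not shown.

```rust
/// Size of the VMXON and VMCS regions (4KB).
const REGION_SIZE: usize = 4096;

/// Hypothetical 4KB, page-aligned buffer used as a VMXON or VMCS region.
#[repr(C, align(4096))]
struct Region([u8; REGION_SIZE]);

/// Writes the VMCS revision identifier (IA32_VMX_BASIC[30:0]) into the first
/// 4 bytes of the region, leaving bit 31 cleared as required.
fn init_vmx_region(region: &mut Region, ia32_vmx_basic: u64) {
    let revision_id = (ia32_vmx_basic & 0x7fff_ffff) as u32;
    region.0[..4].copy_from_slice(&revision_id.to_le_bytes());
}
```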

E#2: Setting up the VMCS/VMCB

- Intel:

- VMCS is already allocated as `self.vmcs_region`

- VMCS is read and written only through the `VMREAD`/`VMWRITE` instructions

- The layout of VMCS is not architecturally defined. Instead, `VMREAD`/`VMWRITE` take an "encoding" (ie, field ID) to specify which field to access

- 📖APPENDIX B FIELD ENCODING IN VMCS

- VMCS needs to be "clear", "active" and "current" to be accessed with `VMREAD`/`VMWRITE`

- (E#2-1, 2-2) Use `VMCLEAR` and `VMPTRLD` to put a VMCS into this state

- VMCS contains host state fields.

- On VM-exit, processor state is updated based on the host state fields

- (E#2-3) Program them with current register values

- AMD:

- VMCB is already allocated as `self.vmcb`

- VMCB is read and written through usual memory access.

- The layout of VMCB is defined.

- 📖Appendix B Layout of VMCB

- VMCB does NOT contain host state fields.

- Instead, another 4KB memory block, called the host state area, is used to save host state on `VMRUN`

- On #VMEXIT, processor state is updated based on the host state area

- (E#2-1) Write the address of the area to the `VM_HSAVE_PA` MSR. The host state area is allocated as `self.host_state`.

- Expected result: panic at E#3.

```
INFO: Entering the fuzzing loop🐇
ERROR: panicked at 'not yet implemented: E#3-1', hypervisor/src/hardware_vt/svm.rs:176:9
```
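Because `VMREAD`/`VMWRITE` identify fields by encoding rather than by offset, it helps to see how an encoding is structured. The sketch below decodes the bit fields described in the Intel SDM's field-encoding appendix:

- bit 0: access type
- bits 9:1: index
- bits 11:10: field type
- bits 14:13: width

The type and function names are hypothetical. The example encodings are taken from that appendix: `0x681E` (guest RIP), `0x6C16` (host RIP), `0x4402` (exit reason) and `0x201A` (EPT pointer).

```rust
#[derive(Debug, PartialEq)]
enum FieldType {
    Control,
    VmExitInformation, // read-only data fields
    GuestState,
    HostState,
}

#[derive(Debug, PartialEq)]
enum FieldWidth {
    Bits16,
    Bits64,
    Bits32,
    Natural, // natural-width: 64 bits on processors supporting Intel 64
}

#[derive(Debug, PartialEq)]
struct VmcsEncoding {
    high_access: bool, // bit 0: access the high 32 bits of a 64-bit field
    index: u32,        // bits 9:1
    field_type: FieldType,
    width: FieldWidth,
}

/// Splits a VMCS field encoding into its components.
fn decode_vmcs_encoding(encoding: u32) -> VmcsEncoding {
    let field_type = match (encoding >> 10) & 0b11 {
        0 => FieldType::Control,
        1 => FieldType::VmExitInformation,
        2 => FieldType::GuestState,
        _ => FieldType::HostState,
    };
    let width = match (encoding >> 13) & 0b11 {
        0 => FieldWidth::Bits16,
        1 => FieldWidth::Bits64,
        2 => FieldWidth::Bits32,
        _ => FieldWidth::Natural,
    };
    VmcsEncoding {
        high_access: encoding & 1 != 0,
        index: (encoding >> 1) & 0x1ff,
        field_type,
        width,
    }
}
```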

E#3: Configuring guest state in the VMCS/VMCB

- Guest state is managed by:

- Intel: guest state fields in VMCS

- AMD: state save area in VMCB

- (E#3-1) Configure a guest based on the snapshot

- Some registers are not updated as part of world switch by hardware

- General purpose registers (GPRs) are examples

- (E#3-2) Initialize guest GPRs. They need to be manually saved and loaded by software

- Expected output: "physical address not available" error

- Intel

```
[CPU0 ]i| | RIP=000000000dd24e73 (000000000dd24e73)
...
(0).[707060553] ??? (physical address not available)
...
ERROR: panicked at '🐛 Non continuable VM exit 0x2', hypervisor\src\hypervisor.rs:126:17
```

- AMD

```
[CPU0 ]i| | RIP=000000000dd24e73 (000000000dd24e73)
...
[CPU0 ]p| >>PANIC<< exception(): 3rd (14) exception with no resolution
[CPU0 ]e| WARNING: Any simulation after this point is completely bogus !
(0).[698607907] ??? (physical address not available)
...
(after pressing the enter key)
...
ERROR: panicked at '🐛 Non continuable VM exit 0x7f', hypervisor\src\hypervisor.rs:126:17
```

- 🎉 Notice that we did receive a VM exit, meaning we successfully switched to the guest-mode

Deeper look into guest-mode transition

- Switching to the guest-mode

- Intel

- `VMLAUNCH` - used for the first transition with the VMCS

- `VMRESUME` - used for subsequent transitions

- AMD: `VMRUN`

- Our implementation:

| Step | AMD: `run_vm_svm()` | Intel: `run_vm_vmx()` |
|---|---|---|
| 1 | Save host GPRs into stack | Save host GPRs into stack |
| 2 | Load guest GPRs from memory | Load guest GPRs from memory |
| 3 | `VMRUN` | if launched { `VMRESUME` } else { set up host `RIP` and `RSP`, then `VMLAUNCH` } |
| 4 | Save guest GPRs into memory | Save guest GPRs into memory |
| 5 | Load host GPRs from stack | Load host GPRs from stack |

- Contents of the GPRs are manually switched, because the `VMRUN`, `VMLAUNCH` and `VMRESUME` instructions do not do it
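The five steps in the table can be modelled in Rust as below. This is a toy model under stated assumptions. `Gprs`, `Vcpu` and `run` are hypothetical names, and only four GPRs are modelled. The real implementation must do this in assembly around the actual instructions. The model shows why Intel needs a "launched" flag and why guest GPRs must persist in memory across VM exits.

```rust
/// Hypothetical subset of the GPRs that software must switch by hand.
#[derive(Clone, Copy, Debug, Default, PartialEq)]
struct Gprs {
    rax: u64,
    rbx: u64,
    rcx: u64,
    rdx: u64,
}

/// Which instruction step 3 would execute on Intel (AMD always uses VMRUN).
#[derive(Debug, PartialEq)]
enum EntryInstruction {
    VmLaunch,
    VmResume,
}

/// Hypothetical per-guest bookkeeping.
struct Vcpu {
    guest_gprs: Gprs,
    launched: bool,
}

impl Vcpu {
    /// Models steps 1-5. `cpu` is the processor's real GPRs, and `guest_runs`
    /// stands in for guest execution until the next VM exit.
    fn run(&mut self, cpu: &mut Gprs, guest_runs: impl FnOnce(&mut Gprs)) -> EntryInstruction {
        let host_gprs = *cpu; // 1. save host GPRs (onto the stack)
        *cpu = self.guest_gprs; // 2. load guest GPRs from memory
        // 3. the first entry with a VMCS uses VMLAUNCH, later ones use VMRESUME
        let instruction = if self.launched {
            EntryInstruction::VmResume
        } else {
            self.launched = true;
            EntryInstruction::VmLaunch
        };
        guest_runs(cpu);
        self.guest_gprs = *cpu; // 4. save guest GPRs into memory
        *cpu = host_gprs; // 5. load host GPRs from the stack
        instruction
    }
}
```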

Causes of VM exits

- Intel: 📖Table C-1. Basic Exit Reasons

- Many of these are optional, but some are always enabled, eg, `CPUID` VM-exit

- 📖26.1 INSTRUCTIONS THAT CAUSE VM EXITS

- 📖26.2 OTHER CAUSES OF VM EXITS

- AMD: 📖Appendix C SVM Intercept Exit Codes

- All except `VMEXIT_INVALID` are optional
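A hypervisor that supports both vendors usually maps the two sets of codes onto one internal representation. The sketch below is an assumption-laden illustration; the `VmExit` enum and the function names are hypothetical. It uses numeric values from the tables cited above:

- Intel basic exit reasons: 2 (triple fault), 10 (CPUID) and 48 (EPT violation)
- AMD exit codes: 0x72 (CPUID), 0x7F (shutdown) and 0x400 (NPF)

These match the values printed in the error messages of E#3 and E#5.

```rust
/// Hypothetical platform-neutral view of a few VM exits used in this course.
#[derive(Debug, PartialEq)]
enum VmExit {
    Cpuid,
    NestedPageFault,
    Shutdown, // Intel triple fault / AMD shutdown
    Other(u64),
}

/// Intel: the basic exit reason is bits 15:0 of the exit reason field.
fn from_vmx_exit_reason(exit_reason: u32) -> VmExit {
    match exit_reason & 0xffff {
        2 => VmExit::Shutdown, // triple fault
        10 => VmExit::Cpuid,
        48 => VmExit::NestedPageFault, // EPT violation
        basic => VmExit::Other(u64::from(basic)),
    }
}

/// AMD: the EXITCODE field of the VMCB.
fn from_svm_exit_code(exit_code: u64) -> VmExit {
    match exit_code {
        0x72 => VmExit::Cpuid,
        0x7f => VmExit::Shutdown,
        0x400 => VmExit::NestedPageFault, // NPF
        other => VmExit::Other(other),
    }
}
```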

Another hypervisor design: Deprivileging current execution context

- We start a guest as a completely separate execution context

- Alternatively, a hypervisor can also start a guest based on the current execution context by capturing current register values and setting them into the guest state fields

- This way, the current system runs in the guest-mode, and a hypervisor intercepts the system's operations

- Type-1 hypervisors do this

- Common for hypervisors that intend to deeply interact with the OS, eg, as a hypervisor debugger, rootkit, or security enhancement

Memory virtualization

This chapter looks into hardware features to virtualize memory access from a guest, namely, nested paging.


- We saw `physical address not available`

- Memory is not virtualized for the guest

- When the guest translates VA to PA using the guest `CR3`:

- as-is (without memory virtualization):

- the translated PA is used to access physical memory

- the guest could read and write any memory (including the hypervisor's or other guests' memory)

- In our case, a PA the guest attempted to access was not available

- with memory virtualization:

- the translated PA is again translated using a hypervisor-managed mapping

- the hypervisor can prevent the guest from accessing the hypervisor's or other guests' memory

Terminologies

- In this material:

- Nested paging - the address translation mechanism used during the guest-mode when...

- Intel: EPT is enabled

- AMD: Nested paging (also referred to as Rapid Virtualization Indexing, RVI) is enabled

- Nested paging is also referred to as second level address translation (SLAT)

- Nested paging structures - the data structures describing translation with nested paging

- Intel: Extended page table(s)

- AMD: Nested page table(s)

- Nested page fault - translation fault at the nested paging level

- Intel: EPT violation

- AMD: #VMEXIT(NPF)

- (Nested) paging structure entry - an entry of any of (nested) paging structures.

- Not to be confused with a "(nested) page table entry", which is specifically "an entry of the (nested) page table"

- Virtual address (VA) - same as "linear address" in the Intel manual

- Physical address (PA) - an effective address to be sent to the memory controller for memory access. Same as "system physical address" in the AMD manual

- Guest physical address (GPA) - an intermediate form of an address used when nested paging is enabled (more on this later)

x64 traditional paging

- VA -> PA translation

- When a processor needs to access a given VA, `va`, the processor does the following to translate it to a PA:

1. Locates the top level paging structure, PML4, from the value in the `CR3` register
2. Indexes the PML4 using `va[47:39]` as an index
3. Locates the next level paging structure, PDPT, from the indexed PML4 entry
4. Indexes the PDPT using `va[38:30]` as an index
5. Locates the next level paging structure, PD, from the indexed PDPT entry
6. Indexes the PD using `va[29:21]` as an index
7. Locates the next level paging structure, PT, from the indexed PD entry
8. Indexes the PT using `va[20:12]` as an index
9. Finds out a page frame to translate to, from the indexed PT entry
10. Combines the page frame and `va[11:0]`, resulting in a PA

- In pseudo code, it looks like this:

```python
# Translate VA to PA
def translate_va(va):
    i4, i3, i2, i1, page_offset = get_indexes(va)
    pml4 = cr3()
    pdpt = pml4[i4]
    pd = pdpt[i3]
    pt = pd[i2]
    page_frame = pt[i1]
    return page_frame | page_offset

# Get indexes and a page offset from the given address
def get_indexes(address):
    i4 = (address >> 39) & 0b111_111_111
    i3 = (address >> 30) & 0b111_111_111
    i2 = (address >> 21) & 0b111_111_111
    i1 = (address >> 12) & 0b111_111_111
    page_offset = address & 0b111_111_111_111
    return i4, i3, i2, i1, page_offset
```

- Intel: 📖Figure 4-8. Linear-Address Translation to a 4-KByte Page using 4-Level Paging

- AMD: 📖Figure 5-17. 4-Kbyte Page Translation-Long Mode 4-Level Paging
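The pseudo code above can be turned into a runnable Rust sketch by simulating physical memory. The following assumptions are not part of the course code:

- Physical memory is a `HashMap` from a table's physical address to its 512 entries.
- Only 4KB pages are handled (no large pages).
- Permission bits other than Present (bit 0) are ignored.

A `None` result corresponds to where the processor would raise #PF.

```rust
use std::collections::HashMap;

/// Present bit (bit 0) of a paging structure entry.
const PRESENT: u64 = 1;
/// Bits 51:12: the PA of the next paging structure, or the page frame.
const ADDR_MASK: u64 = 0x000f_ffff_ffff_f000;

/// Simulated physical memory: 4KB-aligned PA of a table -> its 512 entries.
type PhysMem = HashMap<u64, [u64; 512]>;

/// Returns the [PML4, PDPT, PD, PT] indexes and the page offset of `address`.
fn get_indexes(address: u64) -> ([usize; 4], u64) {
    let index = |shift: u32| ((address >> shift) & 0x1ff) as usize;
    ([index(39), index(30), index(21), index(12)], address & 0xfff)
}

/// Translates `va` to a PA, starting from the PML4 pointed to by `cr3`.
fn translate_va(mem: &PhysMem, cr3: u64, va: u64) -> Option<u64> {
    let (indexes, page_offset) = get_indexes(va);
    let mut next = cr3 & ADDR_MASK; // PML4, then PDPT, PD, PT, and finally the page frame
    for index in indexes {
        let entry = mem.get(&next)?[index];
        if entry & PRESENT == 0 {
            return None; // the processor would raise #PF here
        }
        next = entry & ADDR_MASK;
    }
    Some(next | page_offset)
}
```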

Nested paging

- VA -> GPA -> PA translation

- When a processor needs to access a given VA, the processor does the following to translate it to a PA, if nested paging is enabled and the processor is in the guest-mode:

1. Performs all of the 10 steps in the previous page, using a guest `CR3` register
2. Treats the resulting value as a GPA, instead of a PA
3. Locates the top level nested paging structure, nested PML4, from the value in an EPT pointer (Intel) or nCR3 (AMD)
4. Indexes the nested PML4 using `GPA[47:39]` as an index
5. Locates the next level nested paging structure, nested PDPT, from the indexed nested PML4 entry
6. Indexes the nested PDPT using `GPA[38:30]` as an index
7. Locates the next level nested paging structure, nested PD, from the indexed nested PDPT entry
8. Indexes the nested PD using `GPA[29:21]` as an index
9. Locates the next level nested paging structure, nested PT, from the indexed nested PD entry
10. Indexes the nested PT using `GPA[20:12]` as an index
11. Finds out a page frame to translate to, from the indexed nested PT entry
12. Combines the page frame and `GPA[11:0]`, resulting in a PA

- In pseudo code, it would look like this:

```python
# Translate VA to PA with nested paging
def translate_va_during_guest_mode(va):
    gpa = translate_va(va)     # may raise #PF
    return translate_gpa(gpa)  # may cause VM exit

# Translate VA to (G)PA
def translate_va(va):
    ...  # Omitted. See the previous page

# Translate GPA to PA
def translate_gpa(gpa):
    i4, i3, i2, i1, page_offset = get_indexes(gpa)
    nested_pml4 = read_vmcs(EPT_POINTER) if intel else VMCB.ControlArea.nCR3
    nested_pdpt = nested_pml4[i4]
    nested_pd = nested_pdpt[i3]
    nested_pt = nested_pd[i2]
    page_frame = nested_pt[i1]
    return page_frame | page_offset

# Get indexes and a page offset from the given address
def get_indexes(address):
    ...  # Omitted. See the previous page
```

- Intel: 📖29.3.2 EPT Translation Mechanism

- AMD: 📖15.25.5 Nested Table Walk
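The same simulation can be extended to nested paging. One detail the pseudo code glosses over: guest paging structures live at GPAs. Each of them must therefore be located through nested paging before the guest walk can read its entry, and this sketch models that. Assumptions: physical memory is a `HashMap` of tables, only 4KB pages are handled, and bit 0 decides whether an entry is usable. Bit 0 means Present on AMD and Read on Intel, so this is a simplification for EPT. All names are hypothetical.

```rust
use std::collections::HashMap;

/// Bits 51:12: the address of the next table, or the page frame.
const ADDR_MASK: u64 = 0x000f_ffff_ffff_f000;
/// Simulated (host) physical memory: 4KB-aligned PA of a table -> 512 entries.
type PhysMem = HashMap<u64, [u64; 512]>;

#[derive(Debug, PartialEq)]
enum Fault {
    /// First phase failed: #PF, handled by the guest.
    GuestPageFault,
    /// Second phase failed: EPT violation / #VMEXIT(NPF), handled by the hypervisor.
    NestedPageFault { gpa: u64 },
}

fn get_indexes(address: u64) -> ([usize; 4], u64) {
    let index = |shift: u32| ((address >> shift) & 0x1ff) as usize;
    ([index(39), index(30), index(21), index(12)], address & 0xfff)
}

/// GPA -> PA. Nested paging structures live at PAs, so they are read directly.
fn translate_gpa(mem: &PhysMem, nested_pml4: u64, gpa: u64) -> Result<u64, Fault> {
    let (indexes, page_offset) = get_indexes(gpa);
    let mut next = nested_pml4 & ADDR_MASK;
    for index in indexes {
        let entry = mem.get(&next).map_or(0, |table| table[index]);
        if entry & 1 == 0 {
            return Err(Fault::NestedPageFault { gpa });
        }
        next = entry & ADDR_MASK;
    }
    Ok(next | page_offset)
}

/// VA -> GPA -> PA. Each guest paging structure is a GPA, so it is first
/// translated with nested paging before its entry is read.
fn translate_va_during_guest_mode(mem: &PhysMem, guest_cr3: u64, nested_pml4: u64, va: u64) -> Result<u64, Fault> {
    let (indexes, page_offset) = get_indexes(va);
    let mut next_gpa = guest_cr3 & ADDR_MASK;
    for index in indexes {
        let table_pa = translate_gpa(mem, nested_pml4, next_gpa)?;
        let entry = mem.get(&table_pa).map_or(0, |table| table[index]);
        if entry & 1 == 0 {
            return Err(Fault::GuestPageFault);
        }
        next_gpa = entry & ADDR_MASK;
    }
    translate_gpa(mem, nested_pml4, next_gpa | page_offset)
}
```

Because of this, a nested page fault can also occur while the guest's own page tables are being walked, not only on the final GPA.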

Relation to hypervisor

- Nested paging applies only when the processor is in the guest-mode

- After VM exit, the hypervisor runs under traditional paging using the current (host) `CR3`

- Nested paging is two-phased

- First translation (VA -> GPA) is done exclusively based on guest-controlled data (`CR3` and paging structures in guest-accessible memory)

- Failure in this phase results in #PF, which is handled exclusively by the guest using the guest IDT

- Second translation (GPA -> PA) is done exclusively based on hypervisor-controlled data (EPT pointer/nCR3 and nested paging structures in guest-inaccessible memory)

- Failure in this phase results in VM exit, which is handled exclusively by the hypervisor

- AMD: 📖15.25.6 Nested versus Guest Page Faults, Fault Ordering

Nested page fault

- Translation failure with nested paging structures causes VM exit

- Intel: EPT violation (and a few more 📖29.3.3 EPT-Induced VM Exits)

- AMD: #VMEXIT(NPF)

- A few read-only fields are updated with the details of a failure:

| | Fault reasons | GPA tried to translate | VA tried to translate |
|---|---|---|---|
| Intel | Exit qualification | Guest-physical address | Guest linear address |
| AMD | EXITINFO1 | EXITINFO2 | Not Available |

- Intel: 📖Table 28-7. Exit Qualification for EPT Violations

- AMD: 📖15.25.6 Nested versus Guest Page Faults, Fault Ordering

- Typical actions by a hypervisor:

- update nested paging structure(s) and let the guest retry the same operation

- may require TLB invalidation, like an OS has to when it changes paging structures

- inject an exception to the guest to prevent access
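The two vendors report nested page faults differently, so the hypervisor normalizes them into one structure before handling them. Here is a minimal sketch, assuming the relevant VMCS/VMCB fields have already been read:

- On Intel, bit 1 of the exit qualification indicates a data write. Bits 5:3 indicate whether the GPA was readable, writable and executable.
- On AMD, EXITINFO1 follows the #PF error code format: bit 0 is present and bit 1 is write.

The field names mirror the `TRACE` output shown in E#4. The functions are hypothetical.

```rust
/// Platform-neutral description of a nested page fault.
#[derive(Debug, PartialEq)]
struct NestedPageFaultQualification {
    rip: u64,
    gpa: u64,
    missing_translation: bool,
    write_access: bool,
}

/// Intel: EPT violation. `qualification` is the exit qualification, and `gpa`
/// is the guest-physical address field.
fn from_ept_violation(rip: u64, gpa: u64, qualification: u64) -> NestedPageFaultQualification {
    NestedPageFaultQualification {
        rip,
        gpa,
        // Bits 5:3: was the GPA readable/writable/executable? All clear means
        // there was no translation at all.
        missing_translation: (qualification >> 3) & 0b111 == 0,
        // Bit 1: the access was a data write.
        write_access: qualification & (1 << 1) != 0,
    }
}

/// AMD: #VMEXIT(NPF). EXITINFO1 uses the #PF error code format, and
/// EXITINFO2 holds the faulting GPA.
fn from_npf(rip: u64, exit_info1: u64, exit_info2: u64) -> NestedPageFaultQualification {
    NestedPageFaultQualification {
        rip,
        gpa: exit_info2,
        // Bit 0 (P) clear: the nested translation was not present.
        missing_translation: exit_info1 & 1 == 0,
        // Bit 1 (R/W): the access was a write.
        write_access: exit_info1 & (1 << 1) != 0,
    }
}
```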

Nested paging structure entries

- Comparable to the traditional paging structure entries

- bit[11:0] = flags including page permissions

- bit[N:12] = either a PA of the next nested paging structure, or a page frame to translate to

- Intel: Similar to, but different from, traditional paging structure entries

- 📖Figure 29-1. Formats of EPTP and EPT Paging-Structure Entries

- taking 4KB translation as an example

- 📖Table 29-1. Format of an EPT PML4 Entry (PML4E) that References an EPT Page-Directory-Pointer Table

- 📖Table 29-3. Format of an EPT Page-Directory-Pointer-Table Entry (PDPTE) that References an EPT Page Directory

- 📖Table 29-5. Format of an EPT Page-Directory Entry (PDE) that References an EPT Page Table

- 📖Table 29-6. Format of an EPT Page-Table Entry that Maps a 4-KByte Page

- AMD: Exactly the same as traditional paging structure entries

- taking 4KB translation as an example

- 📖Figure 5-20. 4-Kbyte PML4E-Long Mode

- 📖Figure 5-21. 4-Kbyte PDPE-Long Mode

- 📖Figure 5-22. 4-Kbyte PDE-Long Mode

- 📖Figure 5-23. 4-Kbyte PTE-Long Mode
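The difference between the two formats is easiest to see by building a 4KB leaf entry for each. This sketch is an assumption-laden illustration, and the helper names are hypothetical:

- For Intel, bits 2:0 are the read/write/execute permissions, and bits 5:3 of a leaf entry hold the memory type (6 = write-back).
- For AMD, the usual Present, R/W and U/S bits are used. U/S is set because the AMD manual treats nested translations as user-level accesses.

All other bits (accessed/dirty, PAT, NX and so on) are left at zero.

```rust
/// Bits 51:12 of an entry: the page frame (or the next table).
const ADDR_MASK: u64 = 0x000f_ffff_ffff_f000;

/// Intel EPT leaf entry for a 4KB page.
fn ept_pte(page_pa: u64, writable: bool) -> u64 {
    const READ: u64 = 1 << 0;
    const WRITE: u64 = 1 << 1;
    const EXECUTE: u64 = 1 << 2;
    const MEMORY_TYPE_WB: u64 = 6 << 3; // bits 5:3
    let mut entry = (page_pa & ADDR_MASK) | READ | EXECUTE | MEMORY_TYPE_WB;
    if writable {
        entry |= WRITE;
    }
    entry
}

/// AMD nested leaf entry: the same format as a traditional 4KB PTE.
fn amd_nested_pte(page_pa: u64, writable: bool) -> u64 {
    const PRESENT: u64 = 1 << 0;
    const READ_WRITE: u64 = 1 << 1;
    const USER: u64 = 1 << 2;
    let mut entry = (page_pa & ADDR_MASK) | PRESENT | USER;
    if writable {
        entry |= READ_WRITE;
    }
    entry
}
```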

10,000-foot view: comparison of traditional and nested paging

| | Traditional paging | Intel nested paging | AMD nested paging |
|---|---|---|---|
| Translation for | VA -> PA (or GPA) | GPA -> PA | GPA -> PA |
| Typically owned and handled by | OS (or guest OS) | Hypervisor | Hypervisor |
| Pointer to the PML4 | CR3 | EPT pointer | nCR3 |
| Translation failure | #PF | EPT violation VM-exit | #VMEXIT(NPF) |
| Paging structures compared with traditional ones | (N/A) | Similar | Identical |
| Bit[11:0] of a paging structure entry is | Flags | Flags | Flags |
| Bit[N:12] of a paging structure entry contains | Page frame | Page frame | Page frame |
| Levels of tables for 4KB translation | 4 | 4 | 4 |

Our goals and exercises in this chapter

- E#4: Enable nested paging with empty nested paging structures

- Build up nested paging structures on nested page fault as required, which includes:

- Handling and normalizing EPT violation and #VMEXIT(NPF)

- Resolving the PA of a snapshot-backed page that maps the GPA that caused the fault

- E#5: Building nested paging structures for GPA -> PA translation

- E#6: Implement copy-on-write and fast memory revert mechanism

E#4 Enabling nested paging

- Intel:

- Set bit[1] of the secondary processor-based VM-execution controls.

- Set the EPT pointer VMCS field to point to the EPT PML4.

- 📖Table 25-7. Definitions of Secondary Processor-Based VM-Execution Controls

- 📖25.6.11 Extended-Page-Table Pointer (EPTP)

- AMD:

- Set bit[0] of offset 0x90 (NP_ENABLE bit).

- Set the N_CR3 field (offset 0xb0) to point to the nested PML4.

- 📖15.25.3 Enabling Nested Paging

- Expected output: you should see a normalized VM exit due to `missing_translation: true`

```
TRACE: NestedPageFaultQualification { rip: dd24e73, gpa: ff77000, missing_translation: true, write_access: true }
ERROR: panicked at 'not yet implemented: E#5-1', hypervisor\src\hypervisor.rs:172:9
```
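The E#4 settings boil down to a few bit operations. This is a minimal sketch under stated assumptions:

- The helper names are hypothetical.
- The actual `VMWRITE`s of the EPT pointer and the secondary controls are not shown.
- The VMCB is modelled as a plain byte array.

The EPT pointer format follows 📖25.6.11. The VMCB offsets follow the AMD manual's VMCB layout table, which places NP_ENABLE at offset 0x90 and N_CR3 at offset 0xb0.

```rust
/// Intel: bit 1 of the secondary processor-based VM-execution controls.
/// Bit 31 of the primary processor-based controls ("activate secondary
/// controls") must also be set for the secondary controls to take effect.
const SECONDARY_ENABLE_EPT: u32 = 1 << 1;

/// Intel: builds an EPT pointer. Bits 2:0 hold the memory type (6 = write-back),
/// bits 5:3 the page-walk length minus 1 (3 for 4 levels), and bits N-1:12 the
/// PA of the EPT PML4. Accessed/dirty flags (bit 6) are left disabled.
fn ept_pointer(pml4_pa: u64) -> u64 {
    const MEMORY_TYPE_WB: u64 = 6;
    const WALK_LENGTH_MINUS_ONE: u64 = 3;
    (pml4_pa & !0xfff) | (WALK_LENGTH_MINUS_ONE << 3) | MEMORY_TYPE_WB
}

/// AMD: VMCB control area offsets.
const VMCB_NP_ENABLE_OFFSET: usize = 0x90; // bit 0 = NP_ENABLE
const VMCB_N_CR3_OFFSET: usize = 0xb0;

fn read_u64(vmcb: &[u8; 4096], offset: usize) -> u64 {
    let mut bytes = [0u8; 8];
    bytes.copy_from_slice(&vmcb[offset..offset + 8]);
    u64::from_le_bytes(bytes)
}

fn write_u64(vmcb: &mut [u8; 4096], offset: usize, value: u64) {
    vmcb[offset..offset + 8].copy_from_slice(&value.to_le_bytes());
}

/// AMD: enables nested paging in a VMCB modelled as a 4KB byte array.
fn enable_nested_paging(vmcb: &mut [u8; 4096], nested_pml4_pa: u64) {
    let np_control = read_u64(vmcb, VMCB_NP_ENABLE_OFFSET) | 1;
    write_u64(vmcb, VMCB_NP_ENABLE_OFFSET, np_control);
    write_u64(vmcb, VMCB_N_CR3_OFFSET, nested_pml4_pa);
}
```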

E#5 Building nested paging structures and GPA -> PA translation

- `handle_nested_page_fault()` is called on nested page fault with details of the fault

- `resolve_pa_for_gpa()` returns a PA to translate to, for the given GPA

- `build_translation()` should update nested paging structures to translate the given GPA to PA

- Essentially, walking nested paging structures as processors do and updating the entries needed to complete the translation

- Expected output: It kind of works! Should see repeating fuzzing iterations🤩

```
TRACE: NestedPageFaultQualification { rip: efe1d20, gpa: efe41b8, missing_translation: true, write_access: false }
...
Console output disabled. Enable the `stdout_stats_report` feature if desired.
INFO: HH:MM:SS, Run#, Dirty Page#, New BB#, Total TSC, Guest TSC, VM-exit#,
INFO: 08:15:34, 1, 0, 0, 1017837505, 7957016, 443,
...
INFO: 08:16:41, 3, 0, 0, 200828781, 200817997, 133,
DEBUG: Hang detected : "input_3.png" #2 (bit 1 at offset 0 bytes)
INFO: 08:17:32, 4, 0, 0, 200821817, 200811033, 133,
DEBUG: Hang detected : "input_3.png" #3 (bit 2 at offset 0 bytes)
INFO: 08:18:22, 5, 0, 0, 200829433, 200818649, 133,
DEBUG: Hang detected : "input_3.png" #4 (bit 3 at offset 0 bytes)
INFO: 08:19:12, 6, 0, 0, 200833797, 200817997, 133,
DEBUG: Hang detected : "input_3.png" #5 (bit 4 at offset 0 bytes)
```

- Intel: the 2nd iteration may show `ERROR: 🐈 Unhandled VM exit 0xa`

- But something is not right. Each run causes `Hang detected` and is extremely slow
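The core of `build_translation()` can be sketched as below. This is a hypothetical, simplified model, not the course's solution:

- Memory is a `HashMap` of tables, and new tables come from a bump allocator.
- Every created entry gets bits 2:0 set (P/RW/US on AMD, R/W/X on Intel).
- The EPT memory type of leaf entries is omitted.
- Only 4KB mappings are built.

```rust
use std::collections::HashMap;

/// Bits 51:12 of an entry: the next table or the page frame.
const ADDR_MASK: u64 = 0x000f_ffff_ffff_f000;
/// Bits 2:0 set on every entry we create (AMD: P/RW/US, Intel: R/W/X).
const FLAGS: u64 = 0b111;

/// Simulated nested paging structures plus a bump allocator for new tables.
struct NestedPaging {
    mem: HashMap<u64, [u64; 512]>,
    pml4: u64,
    next_free: u64,
}

impl NestedPaging {
    fn new(pml4: u64) -> Self {
        let mut mem = HashMap::new();
        mem.insert(pml4, [0; 512]);
        Self { mem, pml4, next_free: pml4 + 0x1000 }
    }

    /// Allocates a zeroed 4KB table and returns its PA.
    fn allocate_table(&mut self) -> u64 {
        let pa = self.next_free;
        self.next_free += 0x1000;
        self.mem.insert(pa, [0; 512]);
        pa
    }

    /// Walks the nested PML4 -> PDPT -> PD as the processor does, creating
    /// missing tables, then points the nested PT entry for `gpa` at `pa`.
    fn build_translation(&mut self, gpa: u64, pa: u64) {
        let mut table = self.pml4;
        for shift in [39, 30, 21] {
            let index = ((gpa >> shift) & 0x1ff) as usize;
            let entry = self.mem[&table][index];
            table = if entry & 1 != 0 {
                entry & ADDR_MASK
            } else {
                let new_table = self.allocate_table();
                self.mem.get_mut(&table).unwrap()[index] = new_table | FLAGS;
                new_table
            };
        }
        let index = ((gpa >> 12) & 0x1ff) as usize;
        self.mem.get_mut(&table).unwrap()[index] = (pa & ADDR_MASK) | FLAGS;
    }

    /// Translates `gpa` with the structures built so far (None = nested page fault).
    fn translate(&self, gpa: u64) -> Option<u64> {
        let mut next = self.pml4;
        for shift in [39, 30, 21, 12] {
            let entry = self.mem.get(&next)?[((gpa >> shift) & 0x1ff) as usize];
            if entry & 1 == 0 {
                return None;
            }
            next = entry & ADDR_MASK;
        }
        Some(next | (gpa & 0xfff))
    }
}
```

Translations that share the same upper-level tables reuse them, so later faults in the same 2MB region only update a nested PT entry.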

E#6 Implement copy-on-write and fast memory revert mechanism

- Problem 1: Memory modified by a previous iteration remains modified for a next iteration

- The hypervisor needs to revert memory, not just registers

- Problem 2: Memory modified by one guest is also visible from other guests, because snapshot-backed memory is shared

- The hypervisor needs to isolate the effects of memory modification to the current guest

- Solution: Copy-on-write with nested paging

- (E#6-1) Initially set all pages non-writable through nested paging

- (E#6-2) On nested page fault due to write-access

- change the translation to point to a non-shared physical address (called "dirty page"),

- make it writable,

- keep track of those modified nested paging structure entries, and

- copy contents of original memory into the dirty page

- At the end of each fuzzing iteration, revert all the modified nested paging structure entries
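The copy-on-write steps above can be modeled with a per-guest mapping table. This is a minimal sketch under simplifying assumptions, not the course's code: Guest, Backing, and handle_write_fault() are hypothetical names, a HashMap keyed by guest page frame number stands in for the leaf nested paging entries, and the snapshot is a slice of pages indexed directly by page frame number.

```rust
use std::collections::HashMap;

const PAGE_SIZE: usize = 4096;

/// What a guest page currently maps to; stands in for a leaf NPS entry.
#[derive(Clone, Copy, Debug, PartialEq)]
enum Backing {
    /// Read-only mapping of the snapshot page shared by all guests.
    Shared,
    /// Readable/writable mapping of this guest's private dirty page.
    Dirty(usize),
}

/// Per-guest (per-CPU) copy-on-write state.
struct Guest {
    mappings: HashMap<u64, Backing>, // guest page frame number -> backing
    dirty_pages: Vec<[u8; PAGE_SIZE]>,
    dirty_in_use: usize, // dirty pages handed out in this iteration
    modified: Vec<u64>,  // page frame numbers whose mapping was changed
}

impl Guest {
    fn new(max_dirty_pages: usize) -> Self {
        Self {
            mappings: HashMap::new(),
            dirty_pages: vec![[0; PAGE_SIZE]; max_dirty_pages],
            dirty_in_use: 0,
            modified: Vec::new(),
        }
    }

    fn backing(&self, pfn: u64) -> Backing {
        // (E#6-1) Every page starts out as a shared, read-only mapping.
        self.mappings.get(&pfn).copied().unwrap_or(Backing::Shared)
    }

    /// (E#6-2) Handles the nested page fault raised by a write to a shared page.
    fn handle_write_fault(&mut self, snapshot: &[[u8; PAGE_SIZE]], pfn: u64) -> Result<(), &'static str> {
        let idx = self.dirty_in_use;
        if idx == self.dirty_pages.len() {
            return Err("out of dirty pages");
        }
        self.dirty_in_use += 1;
        self.mappings.insert(pfn, Backing::Dirty(idx)); // private page, writable
        self.modified.push(pfn); // remember the entry for the revert
        self.dirty_pages[idx] = snapshot[pfn as usize]; // copy the original contents
        Ok(())
    }

    fn read(&self, snapshot: &[[u8; PAGE_SIZE]], gpa: u64) -> u8 {
        let offset = (gpa & 0xfff) as usize;
        match self.backing(gpa >> 12) {
            Backing::Shared => snapshot[(gpa >> 12) as usize][offset],
            Backing::Dirty(idx) => self.dirty_pages[idx][offset],
        }
    }

    fn write(&mut self, snapshot: &[[u8; PAGE_SIZE]], gpa: u64, value: u8) -> Result<(), &'static str> {
        let pfn = gpa >> 12;
        // A write to a read-only shared page is what causes the nested page fault.
        if self.backing(pfn) == Backing::Shared {
            self.handle_write_fault(snapshot, pfn)?;
        }
        if let Backing::Dirty(idx) = self.backing(pfn) {
            self.dirty_pages[idx][(gpa & 0xfff) as usize] = value;
        }
        Ok(())
    }

    /// End of a fuzzing iteration: point every modified entry back to the
    /// shared snapshot page and recycle the dirty pages.
    fn revert(&mut self) {
        for pfn in self.modified.drain(..) {
            self.mappings.insert(pfn, Backing::Shared);
        }
        self.dirty_in_use = 0;
    }
}

fn main() {
    let mut snapshot = vec![[0u8; PAGE_SIZE]; 2];
    snapshot[1][0x10] = 0xaa;
    let mut guest0 = Guest::new(16);
    let guest1 = Guest::new(16);

    guest0.write(&snapshot, 0x1010, 0x55).unwrap();
    assert_eq!(guest0.read(&snapshot, 0x1010), 0x55); // guest0 sees its write
    assert_eq!(guest1.read(&snapshot, 0x1010), 0xaa); // guest1 does not
    guest0.revert();
    assert_eq!(guest0.read(&snapshot, 0x1010), 0xaa); // memory is reverted
}
```

Reverting touches only the entries recorded in `modified`, so its cost scales with the number of dirty pages rather than with the size of guest memory.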

- Before triggering copy-on-write

[CPU#0] -> [NPSs#0] --\
                       +- Read-only ---------> [Shared memory backed by snapshot]
[CPU#1] -> [NPSs#1] --/

- After triggering copy-on-write

[CPU#0] -> [NPSs#0] ----- Readable/Writable --> [Private memory]
                       +- Read-only ----------> [Shared memory backed by snapshot]
[CPU#1] -> [NPSs#1] --/

- Expected result: No more Hang detected, and Dirty Page# starts showing numbers🔥

INFO: HH:MM:SS, Run#, Dirty Page#, New BB#, Total TSC, Guest TSC, VM-exit#,
INFO: 09:31:09, 1, 131, 0, 916693934, 7982832, 455,
...
INFO: 09:32:22, 297, 30, 0, 803898, 450754, 41,
INFO: 09:32:25, 298, 131, 0, 8902656, 7919091, 329,

Advanced topics

- Cache management (ie, TLB invalidation)

- Memory types and virtualizing them

- Advanced features: MBEC/GMET, HLAT, DMA protection

VM introspection for fuzzing

This chapter explores useful features and techniques for virtual machine introspection for fuzzing.

Problem 1: Unnecessary code execution

- The guest continues to run even after the target function finishes

- Our snapshot is taken immediately after the call to egDecodeAny() as below

EG_IMAGE* egLoadImage(EFI_FILE* BaseDir, CHAR16 *FileName, BOOLEAN WantAlpha) {
    // ...
    egLoadFile(BaseDir, FileName, &FileData, &FileDataLength);
    newImage = egDecodeAny(FileData, FileDataLength, 128, WantAlpha);
    FreePool(FileData);
    return newImage;
}

- No reason to run FreePool() and the subsequent code

- Can we abort the guest when egDecodeAny() returns?

Introduction to patching

- There may not be any VM exit on return, but we can replace code with something that causes a VM exit

- For example, code can be replaced with the UD instruction, which raises #UD, which can be intercepted as a VM exit

- The hypervisor can modify memory when paging it in from the snapshot

Our Design

- Prepare a patch file, which contains "where" and "with what byte(s) to replace"

- In our case, the patch file describes a patch that replaces the code at the return address of egDecodeAny() with the UD instruction

- When starting the hypervisor, the user specifies the patch file through a command line parameter

- On a nested page fault, the hypervisor applies the patch if the page being paged in is listed in the patch file

- The guest will execute the modified code

- The hypervisor intercepts #UD as a VM exit using exception interception (more on this later)
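Applying patches while a page is paged in from the snapshot could look like the sketch below. The Patch type and apply_patches() are hypothetical: the course's JSON patch file and its parsing are not modeled, the address is made up, and UD2 (bytes 0F 0B) is used as the encoding of the UD instruction.

```rust
/// One entry of a patch file: "where" (a GPA) and "with what bytes".
/// Hypothetical in-memory form; the course's JSON schema may differ.
struct Patch {
    gpa: u64,
    bytes: Vec<u8>,
}

const PAGE_SIZE: u64 = 4096;

/// Applies every patch byte that falls inside the page at `page_gpa`.
/// Meant to run after the page is copied from the snapshot and before it is
/// mapped for the guest. Returns the number of bytes patched.
fn apply_patches(page_gpa: u64, page: &mut [u8], patches: &[Patch]) -> usize {
    assert_eq!(page.len() as u64, PAGE_SIZE);
    let mut patched = 0;
    for patch in patches {
        for (i, &byte) in patch.bytes.iter().enumerate() {
            let addr = patch.gpa + i as u64;
            // Only touch bytes that belong to this page; a patch may straddle pages.
            if addr >= page_gpa && addr < page_gpa + PAGE_SIZE {
                page[(addr - page_gpa) as usize] = byte;
                patched += 1;
            }
        }
    }
    patched
}

fn main() {
    // UD2 at a made-up stand-in for the return address of egDecodeAny().
    let patches = vec![Patch { gpa: 0x1000_0ea8, bytes: vec![0x0f, 0x0b] }];
    let mut page = vec![0x90u8; PAGE_SIZE as usize]; // pretend snapshot contents
    assert_eq!(apply_patches(0x1000_0000, &mut page, &patches), 2);
    assert_eq!(&page[0xea8..0xeaa], &[0x0f, 0x0b]);
    // A page that the patch does not touch is left unchanged.
    let mut other = vec![0x90u8; PAGE_SIZE as usize];
    assert_eq!(apply_patches(0x1000_1000, &mut other, &patches), 0);
}
```

Because patching happens at page-in time, the snapshot itself stays untouched and every guest sees the patched bytes without any extra VM exits.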

Demo: end marker

- Will show:

- The patch file tests/samples/snapshot_patch_end_marker.json

- The patch location with a disassembler

- Updating tests/startup.nsh to supply the patch file

- Running the hypervisor and comparing speed

- One fuzzing iteration completes about 3x faster in some cases

- Before the change

INFO: 12:58:06, 2, 30, 0, 28944596, 421124, 43,
...
INFO: 12:58:35, 302, 131, 0, 8229261, 7333745, 206,

- After the change

INFO: 13:13:30, 2, 6, 0, 98006, 1634, 7,
...
INFO: 13:13:40, 302, 128, 0, 8163017, 7315310, 200,

Exception interception

- By default, an exception that occurs in guest mode is processed entirely within the guest

- Delivered through the current (guest) IDT

- Processed by the guest OS

- A hypervisor can optionally intercept them as VM exits

- Intel: Exception bitmap VMCS field 📖25.6.3 Exception Bitmap

- AMD: Intercept exception vectors (offset 0x8) 📖15.12 Exception Intercepts

- To enable, set the bits that correspond to the exception numbers you want to intercept, eg set 1 << 0xe to intercept #PF (0xe)
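The bit arithmetic is the same for both vendors, since each field is a 32-bit mask indexed by exception vector. A minimal sketch; exception_bitmap() is a hypothetical helper, not part of the course's code.

```rust
/// x86 exception vectors used in this chapter.
const BP: u8 = 0x3; // Breakpoint
const UD: u8 = 0x6; // Invalid opcode
const GP: u8 = 0xd; // General protection
const PF: u8 = 0xe; // Page fault

/// Builds a 32-bit mask where bit N set means "intercept exception vector N".
/// The same layout applies to Intel's exception bitmap VMCS field and to
/// AMD's intercept exception vectors field at VMCB offset 0x8.
fn exception_bitmap(vectors: &[u8]) -> u32 {
    vectors.iter().fold(0, |bitmap, &v| {
        assert!(v < 32, "exception vectors are 0-31");
        bitmap | (1u32 << v)
    })
}

fn main() {
    assert_eq!(exception_bitmap(&[PF]), 1 << 0xe);
    assert_eq!(exception_bitmap(&[GP, PF]), 0x6000);
    let bitmap = exception_bitmap(&[BP, UD]);
    println!("{bitmap:#x}"); // 0x48
}
```

On Intel, whether a #PF actually causes a VM exit also depends on the page-fault error-code mask and match fields; with both left at zero, setting bit 14 intercepts every #PF.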

Exception handling

- On VM exit, read-only fields in the VMCS/VMCB are updated with details of the exception

           Exception Number                    Error Code (if exists)
  Intel    VM-exit interruption information    VM-exit interruption error code
  AMD      EXITCODE                            EXITINFO1

- Intel: 📖25.9.2 Information for VM Exits Due to Vectored Events

- AMD: 📖15.12 Exception Intercepts

- The hypervisor can inject an exception to deliver what would have been delivered to the guest

- Intel: 📖27.6 EVENT INJECTION

- AMD: 📖15.20 Event Injection

- In our case, we will abort the current fuzzing iteration and revert the guest state
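Decoding those fields for the exceptions in this chapter could look like the sketch below. ExceptionInfo, decode_intel(), and decode_amd() are hypothetical names, and reading the raw values out of the VMCS/VMCB is left out. The Intel layout used (vector in bits 7:0, error code valid in bit 11, valid in bit 31) and the AMD exit codes 0x40 to 0x5f (one per vector) follow the manual sections listed above.

```rust
/// Exception details common to both vendors.
#[derive(Debug, PartialEq)]
struct ExceptionInfo {
    vector: u8,
    error_code: Option<u32>,
}

/// Intel: decodes the VM-exit interruption information field and the
/// VM-exit interruption error code field. Assumes the basic exit reason was 0
/// (exception or NMI). The interruption type in bits 10:8 is not used here.
fn decode_intel(interruption_info: u32, error_code: u32) -> Option<ExceptionInfo> {
    if interruption_info & (1 << 31) == 0 {
        return None; // The field does not hold valid information
    }
    let has_error_code = interruption_info & (1 << 11) != 0;
    Some(ExceptionInfo {
        vector: (interruption_info & 0xff) as u8,
        error_code: has_error_code.then_some(error_code),
    })
}

/// Vectors for which the processor pushes an error code.
fn pushes_error_code(vector: u8) -> bool {
    matches!(vector, 8 | 10..=14 | 17 | 21 | 29 | 30)
}

/// AMD: exception intercepts report EXITCODE 0x40 + vector, and EXITINFO1
/// holds the error code for exceptions that push one.
fn decode_amd(exit_code: u64, exit_info1: u64) -> Option<ExceptionInfo> {
    if !(0x40..=0x5f).contains(&exit_code) {
        return None; // Not an exception intercept
    }
    let vector = (exit_code - 0x40) as u8;
    Some(ExceptionInfo {
        vector,
        error_code: pushes_error_code(vector).then_some(exit_info1 as u32),
    })
}

fn main() {
    // #UD reported as a valid hardware exception (type 3) without an error code.
    let ud = decode_intel((1 << 31) | (3 << 8) | 0x6, 0);
    assert_eq!(ud, Some(ExceptionInfo { vector: 6, error_code: None }));
    // #GP(0) intercepted on AMD.
    let gp = decode_amd(0x40 + 0xd, 0);
    assert_eq!(gp, Some(ExceptionInfo { vector: 13, error_code: Some(0) }));
}
```

Normalizing both vendors into one struct lets the rest of the exit handler (aborting the iteration, or injecting the exception back) stay vendor-neutral.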

Our goals and exercises in this chapter

- E#7 Enabling #UD interception for performance improvement

- E#8 Enabling #BP interception for coverage tracking

E#7 Enabling #UD exception interception

- Change tests/startup.nsh to use snapshot_patch_end_marker.json

- Expected result: Each iteration shows Reached the end marker (and completes faster).

Problem 2: Cannot tell efficacy of corpus and mutation

- Did we discover a new execution path? No, no feedback at all.

- Did we find a bug? No, no signal at all.

Basic-block coverage tracking through patches

- Idea

- Patch the beginning of every basic block of a target and trigger a VM exit when the guest executes one

- When such a VM exit occurs, remove the patch (restore the original byte) so that future execution does not cause a VM exit

- VM exit due to the patch == execution of a new basic block == good input

- Implemented in a variety of fuzzers, eg, mesos, ImageIO, Hyntrospect, what the fuzz, KF/x

- Other ideas explained in the Putting the Hype in Hypervisor talk

- Intel Processor Trace

- Branch single stepping

- Interrupt/timer based sampling
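The patch-based idea can be sketched with INT3 (byte 0xCC, which raises #BP) as the patch byte, matching the #BP interception used in E#8. CoverageTracker is hypothetical, guest memory is modeled as a plain byte slice, and the sketch assumes the exit handler passes in the address of the INT3 that was hit. In the snapshot-based design from E#6, a removed patch would also need to be dropped from the patch list so it is not re-applied when the page is paged in again.

```rust
use std::collections::HashMap;

const INT3: u8 = 0xcc;

/// Tracks basic blocks that are still patched with INT3.
struct CoverageTracker {
    /// Address of each not-yet-executed basic block -> its original first byte.
    pending: HashMap<u64, u8>,
    /// Basic blocks discovered so far, in discovery order.
    new_blocks: Vec<u64>,
}

impl CoverageTracker {
    /// Patches the first byte of every basic block in `memory` (based at `base`).
    fn install(memory: &mut [u8], base: u64, blocks: &[u64]) -> Self {
        let mut pending = HashMap::new();
        for &addr in blocks {
            let offset = (addr - base) as usize;
            pending.insert(addr, memory[offset]);
            memory[offset] = INT3;
        }
        Self { pending, new_blocks: Vec::new() }
    }

    /// Handles a #BP VM exit at `addr`. Returns true for a coverage patch,
    /// that is, a newly executed basic block; false for any other #BP.
    fn on_breakpoint(&mut self, memory: &mut [u8], base: u64, addr: u64) -> bool {
        match self.pending.remove(&addr) {
            Some(original) => {
                // Restore the original byte so this block never exits again.
                memory[(addr - base) as usize] = original;
                self.new_blocks.push(addr);
                true
            }
            None => false,
        }
    }
}

fn main() {
    let base: u64 = 0x1000;
    // Made-up code bytes; basic blocks start at 0x1000 and 0x1004.
    let mut memory = vec![0x55u8, 0x48, 0x89, 0xe5, 0x31, 0xc0, 0xc3];
    let mut tracker = CoverageTracker::install(&mut memory, base, &[0x1000, 0x1004]);
    assert_eq!((memory[0], memory[4]), (INT3, INT3));

    // The guest executes the first block: one VM exit, then the patch is gone.
    assert!(tracker.on_breakpoint(&mut memory, base, 0x1000));
    assert_eq!(memory[0], 0x55);
    assert!(!tracker.on_breakpoint(&mut memory, base, 0x1000));
    println!("COVERAGE: {:x?}", tracker.new_blocks); // COVERAGE: [1000]
}
```

Each basic block costs at most one VM exit over the whole fuzzing campaign, which is why this approach stays cheap once most blocks have been discovered.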

Demo: coverage tracking

- Will show:

- Basic blocks in a disassembler

- Generating a patch file using an IDA Python script, tests/ida_generate_patch.py

- Gathering coverage info from the output

- Visualizing coverage using an IDA Python script, tests/ida_highlight_coverage.py

E#8 Enabling #BP interception and coverage tracking

- Change tests/startup.nsh to use snapshot_patch.json

- Expected result: New BB# starts showing numbers. COVERAGE: appears in the log.

INFO: HH:MM:SS, Run#, Dirty Page#, New BB#, Total TSC, Guest TSC, VM-exit#,
...
INFO: 14:37:36, 2, 6, 8, 5361191, 3170, 15,
INFO: COVERAGE: [dd2d8df, dd26544, dd24ea8, dd25cea, dd25cf4, dd25d0f, dd25d08, dd25f78]
TRACE: Reached the end marker
DEBUG: Adding a new input file "input_3.png_1". Remaining 3

Catching possible bugs

- Indicators of bugs and how to detect them:

- Invalid memory access -> #PF interception and nested page fault

- Use of a non-canonical form memory address -> #GP interception

- Valid but bogus code execution -> #UD and #BP interception

- Dead loop -> Timer expiration

- Exploration of those ideas is left to readers

- The author has not discovered any bugs other than dead loops
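As a starting point for that exploration, the indicator list can be turned into a triage function. This is a hypothetical sketch, not something the course implements: Exit, Verdict, and triage() are invented names, it assumes a UEFI guest where a #PF is not part of normal operation, that guest RIP points at the patched instruction on #UD/#BP exits, and that GPAs at or above `guest_memory_size` are backed by nothing.

```rust
use std::collections::HashSet;

/// Hypothetical summary of a VM exit, reduced to what triage needs.
enum Exit {
    /// Intercepted exception: vector and guest RIP at the time of the exit.
    Exception { vector: u8, rip: u64 },
    /// Nested page fault on the given GPA.
    NestedPageFault { gpa: u64 },
    /// The per-iteration timer fired before the end marker was reached.
    TimerExpired,
}

#[derive(Debug, PartialEq)]
enum Verdict {
    /// End marker, coverage patch, or ordinary paging-in from the snapshot.
    Expected,
    InvalidMemoryAccess,
    /// #GP: a non-canonical address is one possible cause among several.
    PossibleNonCanonicalAccess,
    BogusCodeExecution,
    DeadLoop,
    Unclassified,
}

/// Maps an exit to the bug indicators listed above. `patches` holds every
/// address the hypervisor patched (end marker and coverage).
fn triage(exit: &Exit, patches: &HashSet<u64>, guest_memory_size: u64) -> Verdict {
    match *exit {
        Exit::Exception { vector: 0xe, .. } => Verdict::InvalidMemoryAccess,
        Exit::Exception { vector: 0xd, .. } => Verdict::PossibleNonCanonicalAccess,
        Exit::Exception { vector: 0x3 | 0x6, rip } if patches.contains(&rip) => Verdict::Expected,
        Exit::Exception { vector: 0x3 | 0x6, .. } => Verdict::BogusCodeExecution,
        Exit::Exception { .. } => Verdict::Unclassified,
        Exit::NestedPageFault { gpa } if gpa >= guest_memory_size => Verdict::InvalidMemoryAccess,
        Exit::NestedPageFault { .. } => Verdict::Expected,
        Exit::TimerExpired => Verdict::DeadLoop,
    }
}

fn main() {
    let patches: HashSet<u64> = HashSet::from([0x1000]);
    let size = 0x1000_0000;
    let end_marker = Exit::Exception { vector: 6, rip: 0x1000 };
    assert_eq!(triage(&end_marker, &patches, size), Verdict::Expected);
    let stray_ud = Exit::Exception { vector: 6, rip: 0x2000 };
    assert_eq!(triage(&stray_ud, &patches, size), Verdict::BogusCodeExecution);
    let wild_gpa = Exit::NestedPageFault { gpa: 0x2000_0000 };
    assert_eq!(triage(&wild_gpa, &patches, size), Verdict::InvalidMemoryAccess);
    assert_eq!(triage(&Exit::TimerExpired, &patches, size), Verdict::DeadLoop);
}
```

Any verdict other than `Expected` would be a reason to save the current input for later investigation rather than silently reverting the guest.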

Conclusion

This chapter wraps up the course and shows pointers to the next possible areas to explore.

Wrap up🎉

- We implemented a type-1 fuzzing hypervisor

- We learned

- how a hypervisor sets up and starts a guest, and handles events from it

- how a hypervisor virtualizes physical memory for the guest

- how a hypervisor can inspect the guest's behaviour to track execution coverage and catch possible bugs

What's next

- Study state-of-the-art research papers about fuzzing

- Find bugs in firmware and kernel modules by extending the implementation

- Write a hypervisor from scratch to solidify the understanding of the details

Resources

- Recommended bare metal (with serial ports)

- Intel: NUC11TNHi3 Full with 9-pin to DE-9P

- AMD: LLM1v6SQ

- Hypervisors

- Smaller projects:

- ACRN Embedded Hypervisor (Intel + Nesting)

- BitVisor (AMD/Intel + Nesting)

- SimpleVisor (Intel, Windows-centric)

- SimpleSvm (AMD, Windows-centric)

- As a fuzzer: See Introduction

- Tutorials (Windows-centric):

- Book: Hardware and Software Support for Virtualization

- 4-day course I offer👋


- x86-64: OpenSecurityTraining2: x86-64 OS Internals

- UEFI

Thank you!

Thank you for taking the Hypervisor 101 in Rust course!

Please reach out to the author on GitHub if you have ideas to improve the course, or hit the ⭐ button if you enjoyed it.